Indoor Mobile AR Navigation

- 2019

- Role: UX/UI, Prototyping, 3D Animation

- Personal Project — Team: Designer, Engineer

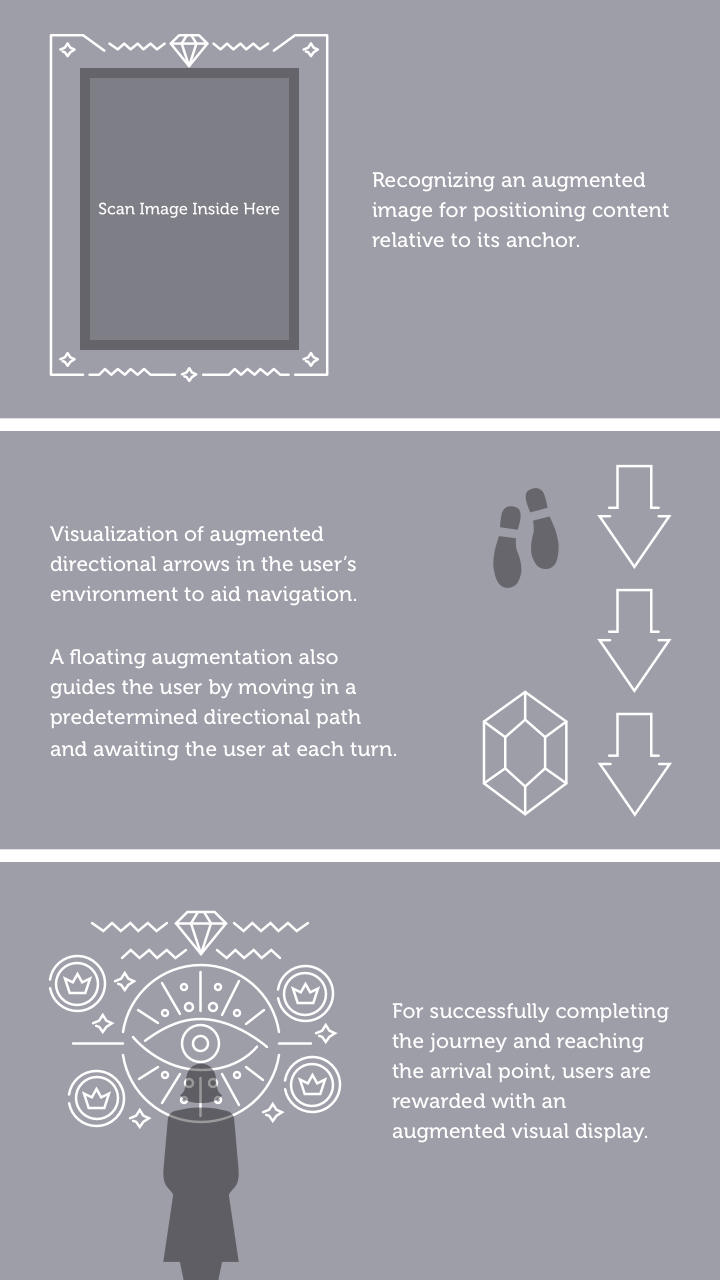

An approach to solving indoor AR navigation using an image target and a specific point of interest. The goal is to have users navigate from point A to B, accurately and efficiently, with a predetermined route.

Full end-to-end user experience

Experience breakdown

Concept / Idea

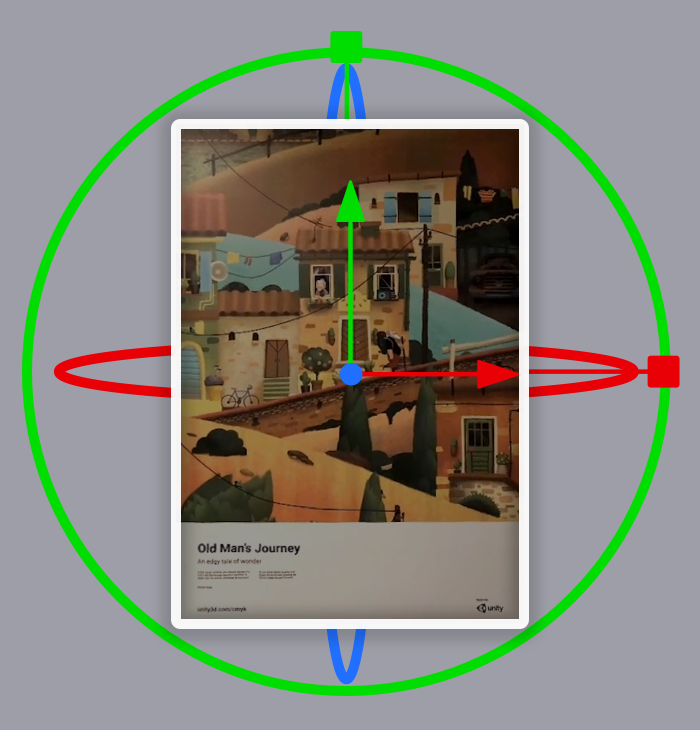

Indoor AR navigation is a hard problem because traditional location tracking with GPS signals gives only a broad understanding of a user's position, while AR needs a precise understanding of the user's local position. The goal is to help users determine and maintain awareness of where they are located in a space and ascertain a path through the environment to the desired destination. My approach to solving indoor navigation involves ARCore's Augmented Images technology, where the device understands where the fiducial marker is physically located in the world once the user scans it. The image provides estimates for position, orientation, and physical size, which the application can use to understand where a user is and how far they are from a point of interest.
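
To illustrate how a tracked image can anchor a point of interest, here is a minimal sketch in Python rather than the Unity project code. It assumes a simplified 2D floor plane (x, z) and a single yaw angle for the marker, whereas ARCore reports a full 3D pose. The names `marker_to_world` and `distance_to_poi` are hypothetical and only serve the example.

```python
import math

def marker_to_world(marker_pos, marker_yaw_deg, local_offset):
    """Convert an (x, z) offset measured in the marker's frame to world coordinates.

    Assumption: a standard 2D rotation by the marker's yaw, then a translation
    by the marker's world position. Units are meters.
    """
    yaw = math.radians(marker_yaw_deg)
    lx, lz = local_offset
    wx = marker_pos[0] + lx * math.cos(yaw) - lz * math.sin(yaw)
    wz = marker_pos[1] + lx * math.sin(yaw) + lz * math.cos(yaw)
    return (wx, wz)

def distance_to_poi(user_pos, poi_world):
    """Straight-line floor distance from the user to the point of interest."""
    return math.hypot(poi_world[0] - user_pos[0], poi_world[1] - user_pos[1])

# Hypothetical numbers: the poster sits at (2, 3) and is rotated 90 degrees;
# the POI was measured 1 m along the poster's local x axis.
poi = marker_to_world((2.0, 3.0), 90.0, (1.0, 0.0))
print(distance_to_poi((2.0, 0.0), poi))
```

Once the marker is found, every measured offset along the route can be expressed in world space the same way, which is why a single scan is enough to place the whole path.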

The chosen image marker is a poster of Old Man's Journey by Broken Rules at the Unity SF Office, and the POI is the lounge on the same floor. The poster contains many unique feature points and is affixed to a wall, which makes it a good image to track. Scanning this image launches a scene with a path-following method of wayfinding using augmented 3D models.

Real world anchor of location, orientation, scale

Process / Implementation

I worked with Dan Miller, a Unity engineer, to measure the distances from the starting position (where the poster is) to the desired arrival point (the lounge) using the AR measure application. Dan used ProBuilder to recreate the main walls and pathways in Unity (where 1 Unity unit equals 1 meter).

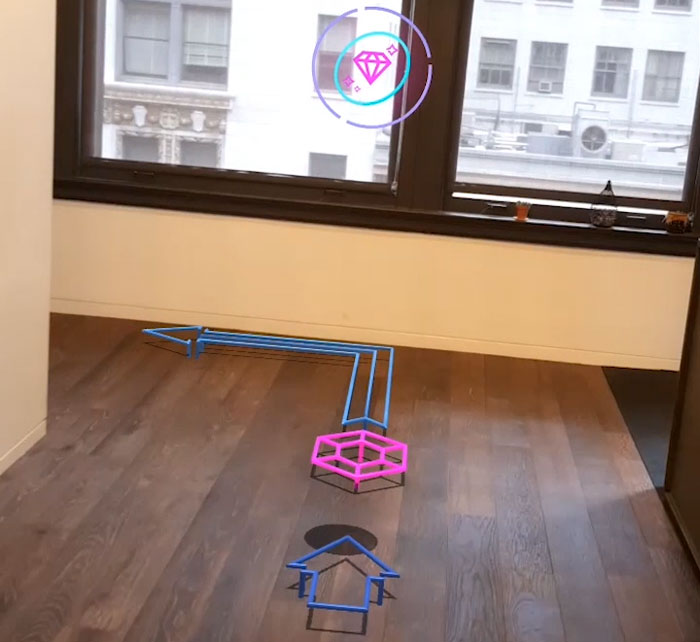

Since this project is a prototype, recreating a simplified version of the environment gave us control over how the AR content appeared and behaved without involving complex engineering. In the Unity editor, I was able to visualize the placement of the augmented directional arrows, as the user would perceive them, using placeholder ProBuilder pathways. The 3D walls took several iterations to match the placement of the real walls, but this process was necessary to occlude the AR navigation content. Occlusion was important both for a good user experience and for making the augmentation feel grounded in the real world.

One way to make the application more robust for navigating indoor spaces is to have arrows and content appear dynamically. Instead of hand-placing the perceived route, the user's position could trigger arrows to spawn nearby and at corners, continuing to guide the user in the correct direction.
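
As a rough sketch of that idea (not something built for this prototype), the snippet below picks which route waypoints should show an arrow based on the user's position. It assumes the route is a list of (x, z) corner points in meters. The function name `arrows_to_show` and the 3 m default radius are hypothetical choices for illustration.

```python
import math

def arrows_to_show(route, user_pos, radius=3.0):
    """Return (waypoint_index, heading_degrees) for waypoints near the user.

    The heading points from each waypoint toward the next one on the route.
    The final waypoint is the destination, so it gets no arrow.
    """
    visible = []
    for i in range(len(route) - 1):
        x, z = route[i]
        if math.hypot(x - user_pos[0], z - user_pos[1]) <= radius:
            nx, nz = route[i + 1]
            heading = math.degrees(math.atan2(nz - z, nx - x))
            visible.append((i, heading))
    return visible

# Hypothetical L-shaped route: start, one corner, destination.
route = [(0, 0), (0, 5), (4, 5)]
print(arrows_to_show(route, user_pos=(0, 4), radius=2.0))
```

Calling this every frame with the device position would let arrows appear only around the user instead of populating the whole route up front.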

Building 3D walls to occlude the augmentation

Design & Depth Perception

I designed 3D directional arrows as the main augmented content in this experience, because arrows are an affordance that users understand. Arrows are a graphic icon universally recognized for indicating direction. By overlaying 3D arrows (plus some icons of gems) on the physical floor and pointing at each turn, users inherently understand that the direction depicted by the arrows is guiding them to a physical location.

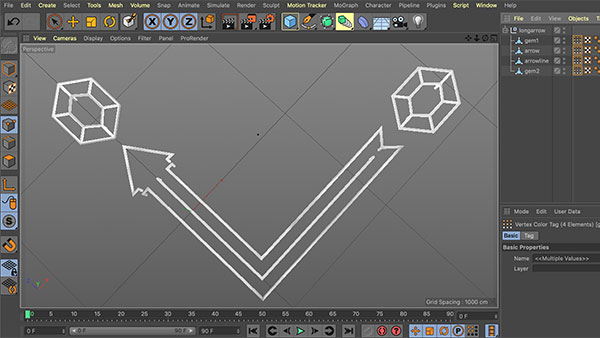

I modeled all the arrows and 3D augmented signs in Cinema4D and used the paint tool to add vertex color shading, which helps maintain the clarity of the materials. The shader was applied in Unity and an additional shader animation was applied to have the models light up occasionally to draw the user's attention and emulate neon signs.

The 3D models were designed with intention so that the user can accurately perceive where they are placed and be guided through the experience. To do this, I used color, shading, and shadows to give the user accurate depth cues throughout the experience. Shadows in particular help the objects feel grounded and placed on the floor. Lastly, the floating orb with its own shadow further reinforces these ideas and has a unique animation associated with it to draw the user's attention.

C4D: Painted models white with a bit of shading

Interact with the 3D models exported as glTF

Cast shadows are useful in improving depth cues

Movement Based Interactions

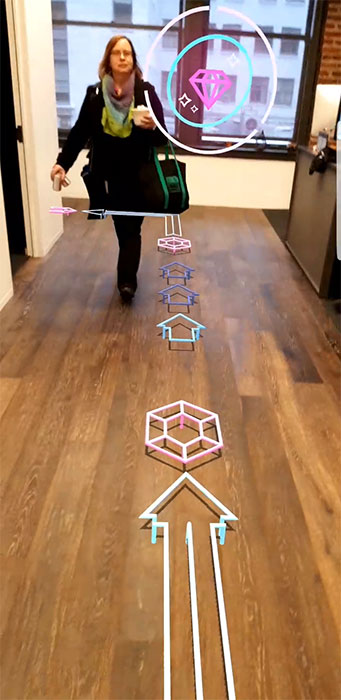

The main interaction in this application is the user's movement: first scanning the fiducial marker, which launches the experience and places the content in the environment, and then physically moving through the space while being guided by AR arrows to reach the destination.

For this prototype, I designed a lot of 3D arrows and gem-like symbols to lead users to the visual reward located at the destination. As the user reaches the lounge space and is one meter away from the "X" sign on the floor, the 3D neon sign animates into view. The user's proximity to the "X" sign triggers the final part of the experience.
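
The proximity trigger can be sketched as a simple distance check that fires once. This is illustrative Python, not the Unity implementation. The class name `ProximityTrigger` is hypothetical, and the only value taken from the prototype is the one-meter radius.

```python
import math

class ProximityTrigger:
    """Fires a single time when the user comes within `radius` meters of a target."""

    def __init__(self, target, radius=1.0):
        self.target = target
        self.radius = radius
        self.fired = False

    def update(self, user_pos):
        """Return True only on the first update where the user is in range."""
        if self.fired:
            return False
        if math.dist(user_pos, self.target) <= self.radius:
            self.fired = True
            return True
        return False

# Hypothetical positions in meters: the "X" sign is at the origin.
x_sign = ProximityTrigger(target=(0.0, 0.0, 0.0))
for pos in [(3.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.2, 0.0, 0.0)]:
    if x_sign.update(pos):
        print("play neon sign animation")
```

Latching the trigger with `fired` keeps the reward animation from restarting if the user lingers near the sign.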

One of the most important parts of the experience is the floating orb, which I designed to stop and wait for the user at each part of the navigation. The idea for this feature developed through the testing and iteration of the directional arrows. While the 3D arrows felt integrated in the environment and caught the user's attention, it was important that the user didn't have to continually point at and view content on the floor (because this means the user is not fully paying attention to their surroundings while walking). The floating orb feels like a guide that understands the user's current position and demonstrates the right direction at each turn.
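
One way to model this wait-and-advance behavior is a small state machine: the orb holds at a waypoint until the user is close, then moves to the next one. The sketch below is illustrative Python under that assumption. `GuideOrb` and the 1.5 m `wait_radius` are hypothetical and not taken from the prototype.

```python
import math

class GuideOrb:
    """Orb that waits at each waypoint until the user catches up."""

    def __init__(self, waypoints, wait_radius=1.5):
        self.waypoints = waypoints
        self.wait_radius = wait_radius
        self.index = 0  # waypoint the orb is currently waiting at

    @property
    def position(self):
        return self.waypoints[self.index]

    def update(self, user_pos):
        """Advance one waypoint if the user is close enough; return the orb position."""
        at_destination = self.index == len(self.waypoints) - 1
        if not at_destination and math.dist(user_pos, self.position) <= self.wait_radius:
            self.index += 1
        return self.position

# Hypothetical route on the floor plane, in meters.
orb = GuideOrb([(0, 0), (0, 5), (4, 5)])
for user in [(0, -3), (0, -1), (0, 4), (4, 5)]:
    print(user, "->", orb.update(user))
```

In a real scene the orb would animate between positions rather than jump, but the waiting logic stays the same.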

Floating AR guide interacts with user's proximity

Challenges / Limitations

A lot of the initial testing of the app was done in the intended space during the evenings and weekends. When we started asking others to test our prototype during work hours, users struggled whenever people walked through their experience. Without occlusion by people, the user's perception of real-world depth relative to the augmented content is subverted. To have an effective experience with AR apps like this one, users must be able to accurately perceive the AR content in its real-world location.

Another limitation of the technology in its current state is the amount of drifting that occurs with the AR content, especially once users are farther away from the tracked image. If drifting persists during the experience, it can ruin the user's perception of the augmented content. It's important to ensure that virtual objects are perceived accurately in relation to the physical world because the virtual content's meaning is tied to the environment. In this case, if the direction of the arrows is inaccurate, it breaks the experience.

To improve stability and tracking, I would increase the number of image targets. For instance, each wall could have a unique fiducial marker, such as a poster or a sign, to help users regain their position in relation to the predetermined path. Another possibility is to scan and track the planes found on floors and walls to further anchor the augmented content, which would make it more stable.

Without occlusion, arrows falsely appear closer

Ways to Improve Accessibility

Here are some factors to consider for improving accessibility if this prototype is to be built into a usable product. In this application, the floating orb is placed at eye level for the average user (1.75 meters). This does not consider users at the extreme ends in terms of height. Instead, I would position the orb at the same height from the ground as the mobile device. I would also make sure the tracked image is positioned at a height that is easy to reach and scan for all users.

When designing experiences that have value, it's important to make comfort a priority. It's uncomfortable and dangerous for users to walk around holding their phones up for long periods of time. For the full product that focuses on indoor navigation, the 3D arrows and floating guide will animate into the world only at key points in the navigation, such as turns. For instance, in a large open office, the arrows will be placed near image targets and entrances/exits. This way the user is encouraged to keep their device down during most of the walking experience until they need to see the next direction. Haptic vibrations and audio (if enabled) can aid this process and let users know when to look back at their mobile device.

This indoor navigation app should have full knowledge of the environment that the user is in and navigating. Whether that means inputting or uploading 2D floor plans or 3D CAD models of the building, the application will have prior information about the architecture. This will help the application react efficiently and provide users with more optimized routes, such as avoiding stairs if that is the user's intention.
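
If the floor plan were available as a graph of walkable segments, stair-free routing could be sketched with a shortest-path search that skips edges tagged as stairs. The Python below uses Dijkstra's algorithm as one possible approach. The graph, node names, distances, and the `shortest_route` function are all hypothetical and do not come from the prototype.

```python
import heapq

def shortest_route(graph, start, goal, avoid_stairs=False):
    """Dijkstra's shortest path over edges of the form (neighbor, meters, kind).

    Returns (total_meters, [nodes...]), or None if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, meters, kind in graph.get(node, []):
            if avoid_stairs and kind == "stairs":
                continue  # accessibility preference: skip stair segments
            if neighbor not in visited:
                heapq.heappush(queue, (cost + meters, neighbor, path + [neighbor]))
    return None

# Hypothetical building graph; distances in meters.
building = {
    "poster": [("stairs_top", 5.0, "stairs"), ("elevator", 12.0, "hall")],
    "stairs_top": [("lounge", 4.0, "hall")],
    "elevator": [("lounge", 6.0, "elevator")],
}
print(shortest_route(building, "poster", "lounge"))
print(shortest_route(building, "poster", "lounge", avoid_stairs=True))
```

Tagging edges by kind keeps the preference separate from the geometry, so other constraints (for example, door width) could be filtered in the same way.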

Users at any height can view the floating guide

Guide adjusts positionally to always be visible